Preparation

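At the time of writing there was no official Kafka image, but there is a well-starred personal image, wurstmeister/kafka, that is updated fairly often, so that is the one we use: `docker pull wurstmeister/kafka`. Kafka depends on ZooKeeper to start, so we also need `docker pull digitalwonderland/zookeeper`. Both images are then pushed to the self-hosted Harbor registry.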
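The pull, retag and push steps can be sketched as follows. The registry address `harbor.example.com` and the project name `shop` are placeholders, not values from this setup, and the helper names are made up for illustration.

```shell
# Placeholders: replace with your own Harbor address and project.
HARBOR="harbor.example.com"
PROJECT="shop"

# Map an upstream image to its name in the private registry, e.g.
# wurstmeister/kafka -> harbor.example.com/shop/kafka:latest
harbor_ref() {
  name="${1##*/}"                 # strip the upstream namespace
  case "$name" in
    *:*) ;;                       # already has a tag
    *)   name="$name:latest" ;;   # default tag, as docker pull assumes
  esac
  printf '%s/%s/%s\n' "$HARBOR" "$PROJECT" "$name"
}

# Pull from Docker Hub, retag for Harbor, and push.
mirror_image() {
  target="$(harbor_ref "$1")"
  docker pull "$1" &&
    docker tag "$1" "$target" &&
    docker push "$target"
}

# Usage (after `docker login harbor.example.com`):
#   mirror_image wurstmeister/kafka
#   mirror_image digitalwonderland/zookeeper
```

Retagging with the registry host as a prefix is what tells `docker push` where the image should go.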

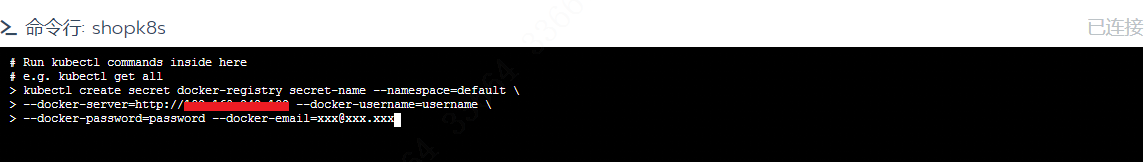

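At deploy time Rancher pulls the images from Harbor, but a private Harbor project requires username and password authentication. So in the Rancher UI, go to the cluster, click "Launch kubectl", and create an image pull secret with `kubectl create secret docker-registry`, filling in the following values: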

Here secret-name is the name of the secret, namespace is the namespace, docker-server is the Harbor registry address, docker-username and docker-password are the Harbor login credentials, and docker-email is an email address.
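Assuming the command in question is `kubectl create secret docker-registry`, whose flags match the parameters just listed, it could be wrapped like this. The function name and the example values are hypothetical.

```shell
# create_pull_secret NAME NAMESPACE SERVER USERNAME PASSWORD EMAIL
# Creates an image pull secret in the given namespace.
create_pull_secret() {
  kubectl create secret docker-registry "$1" \
    --namespace="$2" \
    --docker-server="$3" \
    --docker-username="$4" \
    --docker-password="$5" \
    --docker-email="$6"
}

# Example with placeholder values:
#   create_pull_secret harbor-secret shop harbor.example.com admin 'password' ops@example.com
```

Secrets are namespaced, so the namespace passed here has to be the one the workloads will run in.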

Once that is done, the three workers should be able to authenticate against the Harbor registry.

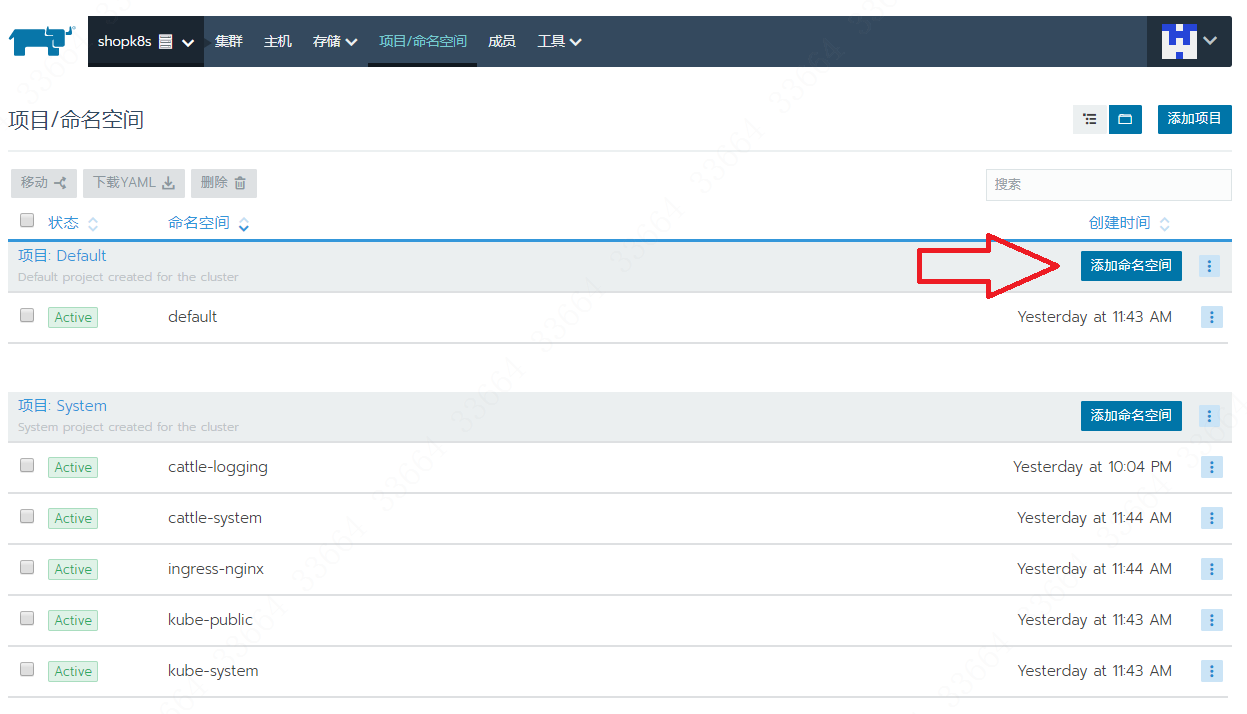

Deploying ZooKeeper

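Now let's deploy ZooKeeper. First go to Projects/Namespaces and create a new namespace in the Default project, for example shop.

Then open the cluster drop-down menu and select Default: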

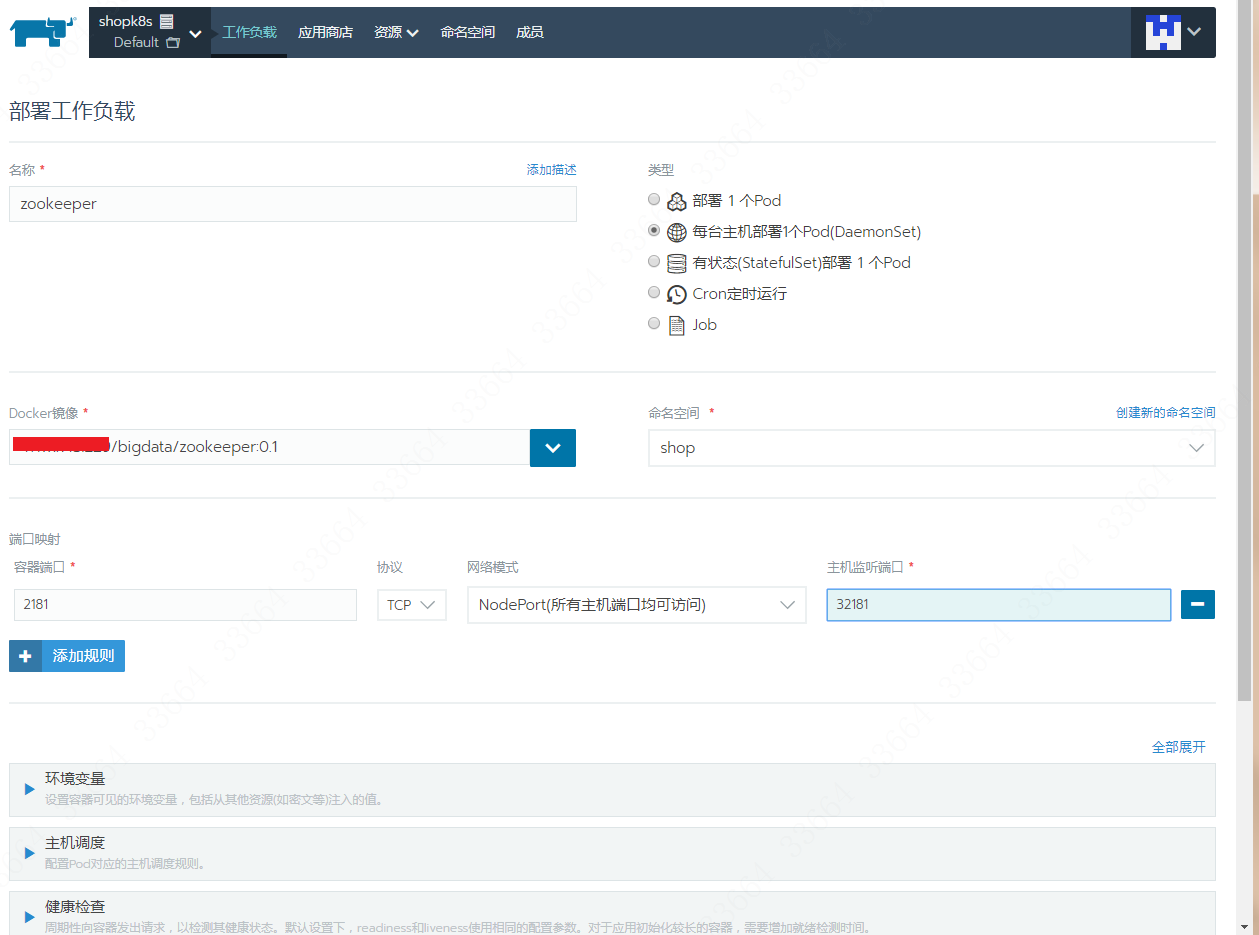

Click Deploy and fill in the basic settings:

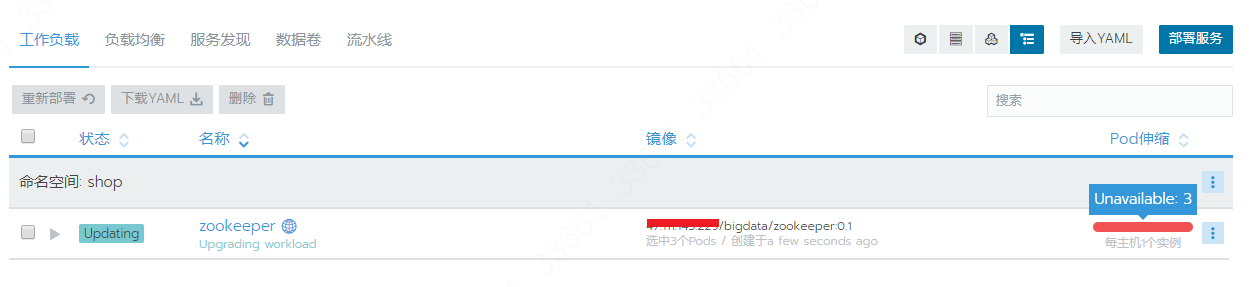

Then click Launch and that should be it! But don't celebrate too soon: the pods turn out to be in error, stuck in the Unavailable state:

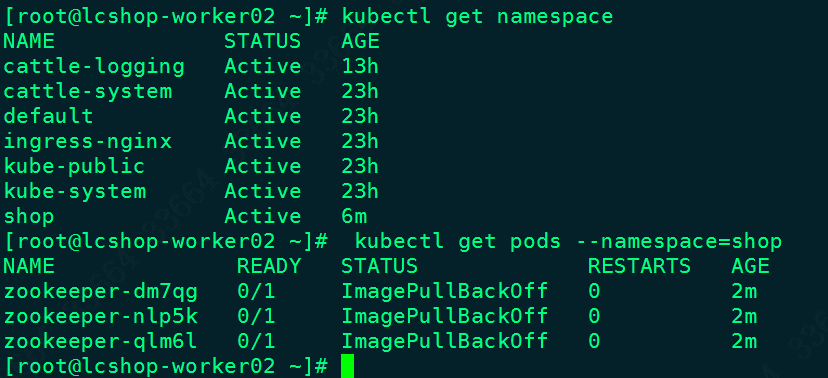

On a worker, run `kubectl get pods --namespace=shop` to check the status of these pods:

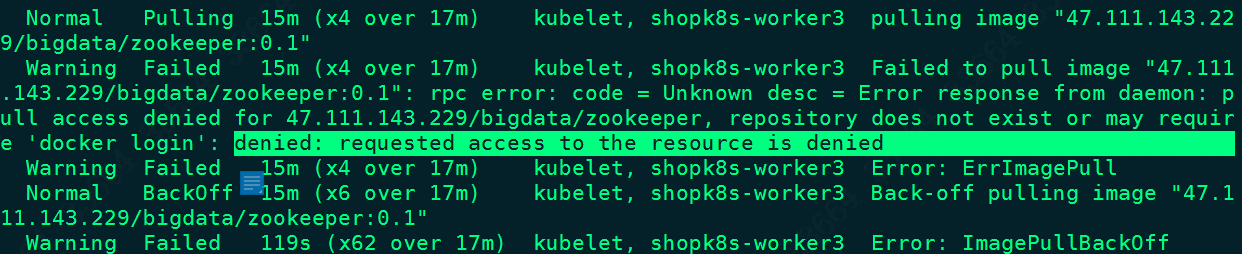

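ImagePullBackOff? Apart from network problems, that leaves three possible causes: the image tag is wrong, the image does not exist (or lives in a different registry), or Kubernetes has no permission to pull the image. Running `kubectl describe pod <pod-name> --namespace=shop` shows the details, and sure enough, the pull was denied.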
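When a pod description is long, the part that matters for a failed pull is the Events section at the end. A small sketch that prints only that section (the function name is hypothetical):

```shell
# pull_events NAMESPACE POD
# Print the Events section of `kubectl describe pod`, which is where
# image pull failures and their reasons are reported.
pull_events() {
  kubectl describe pod "$2" --namespace="$1" | sed -n '/^Events:/,$p'
}

# Example with a placeholder pod name:
#   pull_events shop zookeeper-1-xxxxx
```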

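It turned out I had made two mistakes. First, I had created the secret in the default namespace, while the deployment lives in the shop namespace. Second, `kubectl get secret secret-name -o yaml` together with `echo "<base64 value>" | base64 --decode` revealed that I had entered the wrong Harbor IP: the secret contained the internal IP, while the image field in the deployment used the external IP. After fixing both, everything worked!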
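The two checks can be combined into one step. The sketch below assumes the secret was created as a docker-registry secret, which stores its payload under the `.dockerconfigjson` key; the function and secret names are hypothetical.

```shell
# secret_registry_config NAME NAMESPACE
# Print the decoded Docker config stored in an image pull secret, so the
# registry address can be compared with the one used in the image field.
secret_registry_config() {
  kubectl get secret "$1" --namespace="$2" \
    -o jsonpath='{.data.\.dockerconfigjson}' | base64 --decode
}

# Example with placeholder names:
#   secret_registry_config harbor-secret shop
```

Passing the namespace explicitly also catches the first mistake: if the secret is not in the workload's namespace, the lookup fails with a not-found error.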

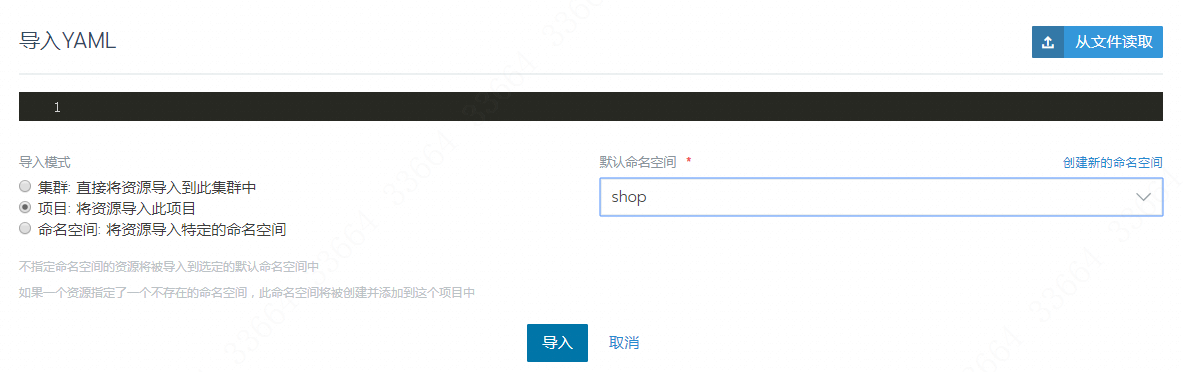

If you prefer deploying with YAML, click Import YAML under Workloads and paste in the following YAML:

```yaml
kind: Deployment
apiVersion: apps/v1  # extensions/v1beta1 in the original; removed in Kubernetes 1.16
metadata:
  name: zookeeper-deployment-1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper-1
      name: zookeeper-1
  template:
    metadata:
      labels:
        app: zookeeper-1
        name: zookeeper-1
    spec:
      containers:
        - name: zoo1
          image: digitalwonderland/zookeeper
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 2181
          env:
            - name: ZOOKEEPER_ID
              value: "1"
            - name: ZOOKEEPER_SERVER_1
              value: zoo1
            - name: ZOOKEEPER_SERVER_2
              value: zoo2
            - name: ZOOKEEPER_SERVER_3
              value: zoo3
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: zookeeper-deployment-2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper-2
      name: zookeeper-2
  template:
    metadata:
      labels:
        app: zookeeper-2
        name: zookeeper-2
    spec:
      containers:
        - name: zoo2
          image: digitalwonderland/zookeeper
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 2181
          env:
            - name: ZOOKEEPER_ID
              value: "2"
            - name: ZOOKEEPER_SERVER_1
              value: zoo1
            - name: ZOOKEEPER_SERVER_2
              value: zoo2
            - name: ZOOKEEPER_SERVER_3
              value: zoo3
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: zookeeper-deployment-3
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper-3
      name: zookeeper-3
  template:
    metadata:
      labels:
        app: zookeeper-3
        name: zookeeper-3
    spec:
      containers:
        - name: zoo3
          image: digitalwonderland/zookeeper
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 2181
          env:
            - name: ZOOKEEPER_ID
              value: "3"
            - name: ZOOKEEPER_SERVER_1
              value: zoo1
            - name: ZOOKEEPER_SERVER_2
              value: zoo2
            - name: ZOOKEEPER_SERVER_3
              value: zoo3
```

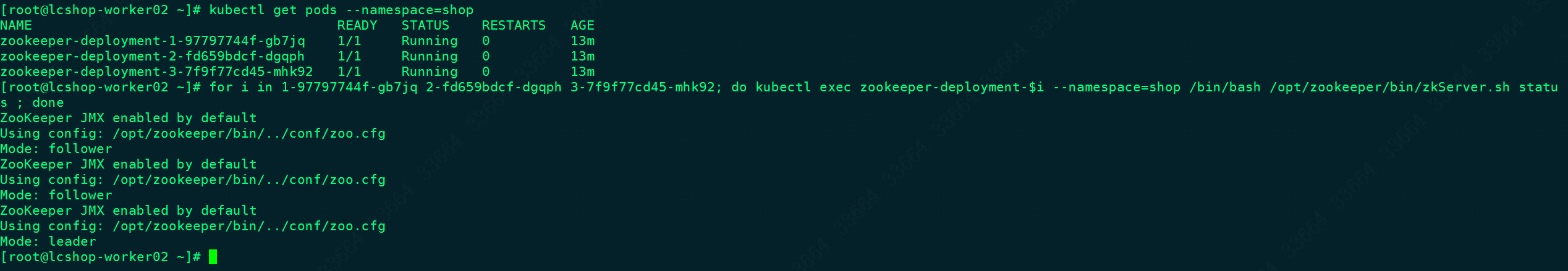

After you click submit, the three deployments above are created. On any worker, run `for i in <pod name suffixes>; do kubectl exec zookeeper-$i --namespace=shop -- /bin/bash /opt/zookeeper/bin/zkServer.sh status; done` to print the status of each node.
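If typing the pod name suffixes by hand is tedious, the loop can discover the pods itself. This is a sketch that assumes every ZooKeeper pod has "zookeeper" in its name and that `zkServer.sh` lives at the path used above; the function name is hypothetical.

```shell
# zk_modes NAMESPACE
# Print "<pod> Mode: <role>" for every pod whose name contains "zookeeper".
zk_modes() {
  for p in $(kubectl get pods --namespace="$1" -o name | grep zookeeper); do
    pod="${p#pod/}"   # `-o name` prints pod/<name>
    mode="$(kubectl exec "$pod" --namespace="$1" -- \
      /opt/zookeeper/bin/zkServer.sh status 2>/dev/null | grep '^Mode:')"
    printf '%s %s\n' "$pod" "${mode:-Mode: unknown}"
  done
}

# Example:
#   zk_modes shop
```

A healthy three-node ensemble reports one leader and two followers.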

The ZooKeeper roles have all been assigned, so the ZooKeeper cluster is up!
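One detail the manifests leave implicit: the pods address each other as zoo1, zoo2 and zoo3, and those names have to resolve inside the namespace. A common way to provide them, assumed here because it is not shown in this post, is one Service per instance named after the host. The sketch below prints such a Service; the function name and port names are made up, while 2181, 2888 and 3888 are ZooKeeper's conventional client, peer and election ports.

```shell
# zk_service_yaml N
# Print a Service named zooN that selects the pods labelled app=zookeeper-N.
zk_service_yaml() {
  cat <<EOF
---
apiVersion: v1
kind: Service
metadata:
  name: zoo$1
spec:
  selector:
    app: zookeeper-$1
  ports:
    - name: client
      port: 2181
    - name: peer
      port: 2888
    - name: election
      port: 3888
EOF
}

# Usage:
#   for i in 1 2 3; do zk_service_yaml "$i"; done | kubectl apply --namespace=shop -f -
```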

Deploying Kafka

Still in the same cluster, click Service Discovery in the top menu bar, then click Import YAML next to it and paste in the following YAML:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kafka-service-1
  labels:
    app: kafka-service-1
spec:
  type: NodePort
  ports:
    - port: 9092
      name: kafka-service-1
      targetPort: 9092
      nodePort: 30901
      protocol: TCP
  selector:
    app: kafka-service-1
---
apiVersion: v1
kind: Service
metadata:
  name: kafka-service-2
  labels:
    app: kafka-service-2
spec:
  type: NodePort
  ports:
    - port: 9092
      name: kafka-service-2
      targetPort: 9092
      nodePort: 30902
      protocol: TCP
  selector:
    app: kafka-service-2
---
apiVersion: v1
kind: Service
metadata:
  name: kafka-service-3
  labels:
    app: kafka-service-3
spec:
  type: NodePort
  ports:
    - port: 9092
      name: kafka-service-3
      targetPort: 9092
      nodePort: 30903
      protocol: TCP
  selector:
    app: kafka-service-3
```

Then go back to Workloads, open Import YAML again, and enter the following YAML:

```yaml
kind: Deployment
apiVersion: apps/v1  # extensions/v1beta1 in the original; removed in Kubernetes 1.16
metadata:
  name: kafka-deployment-1
spec:
  replicas: 1
  selector:
    matchLabels:
      name: kafka-service-1
  template:
    metadata:
      labels:
        name: kafka-service-1
        app: kafka-service-1
    spec:
      containers:
        - name: kafka-1
          image: wurstmeister/kafka
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 9092
          env:
            - name: KAFKA_ADVERTISED_PORT
              value: "9092"
            - name: KAFKA_ADVERTISED_HOST_NAME
              value: "<cluster IP of kafka-service-1>"
            - name: KAFKA_ZOOKEEPER_CONNECT
              value: zoo1:2181,zoo2:2181,zoo3:2181
            - name: KAFKA_BROKER_ID
              value: "1"
            - name: KAFKA_CREATE_TOPICS
              value: mytopic:2:1
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: kafka-deployment-2
spec:
  replicas: 1
  selector:
    matchLabels:
      name: kafka-service-2
  template:
    metadata:
      labels:
        name: kafka-service-2
        app: kafka-service-2
    spec:
      containers:
        - name: kafka-2
          image: wurstmeister/kafka
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 9092
          env:
            - name: KAFKA_ADVERTISED_PORT
              value: "9092"
            - name: KAFKA_ADVERTISED_HOST_NAME
              value: "<cluster IP of kafka-service-2>"
            - name: KAFKA_ZOOKEEPER_CONNECT
              value: zoo1:2181,zoo2:2181,zoo3:2181
            - name: KAFKA_BROKER_ID
              value: "2"
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: kafka-deployment-3
spec:
  replicas: 1
  selector:
    matchLabels:
      name: kafka-service-3
  template:
    metadata:
      labels:
        name: kafka-service-3
        app: kafka-service-3
    spec:
      containers:
        - name: kafka-3
          image: wurstmeister/kafka
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 9092
          env:
            - name: KAFKA_ADVERTISED_PORT
              value: "9092"
            - name: KAFKA_ADVERTISED_HOST_NAME
              value: "<cluster IP of kafka-service-3>"
            - name: KAFKA_ZOOKEEPER_CONNECT
              value: zoo1:2181,zoo2:2181,zoo3:2181
            - name: KAFKA_BROKER_ID
              value: "3"
```

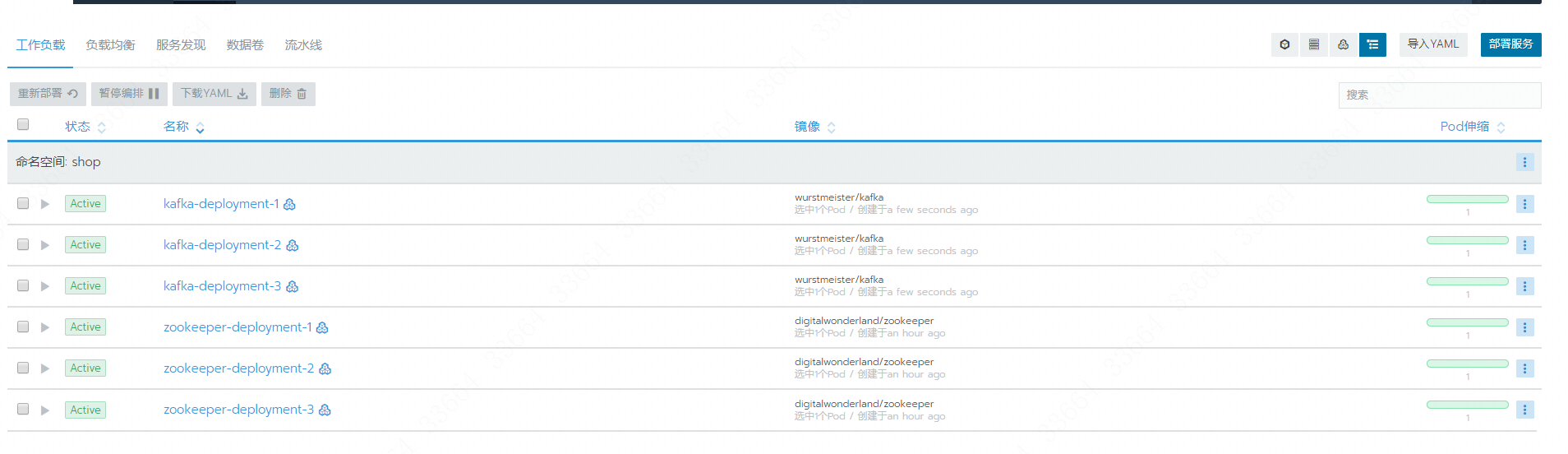

Kafka is then created! Personally I really like creating services from YAML.

If the Import YAML dialog appears to hang, the YAML is most likely malformed. Click cancel, fix the error, and submit again.
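The KAFKA_ADVERTISED_HOST_NAME placeholders in the deployments have to be filled with the cluster IPs of the three services, which only exist once the services have been created. A sketch for looking them up, assuming the service names used in this post (the function name is hypothetical):

```shell
# kafka_cluster_ips NAMESPACE
# Print "<service> <clusterIP>" for kafka-service-1..3.
kafka_cluster_ips() {
  for i in 1 2 3; do
    ip="$(kubectl get service "kafka-service-$i" --namespace="$1" \
      -o jsonpath='{.spec.clusterIP}')"
    printf 'kafka-service-%s %s\n' "$i" "$ip"
  done
}

# Example:
#   kafka_cluster_ips shop
```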

Verifying Kafka

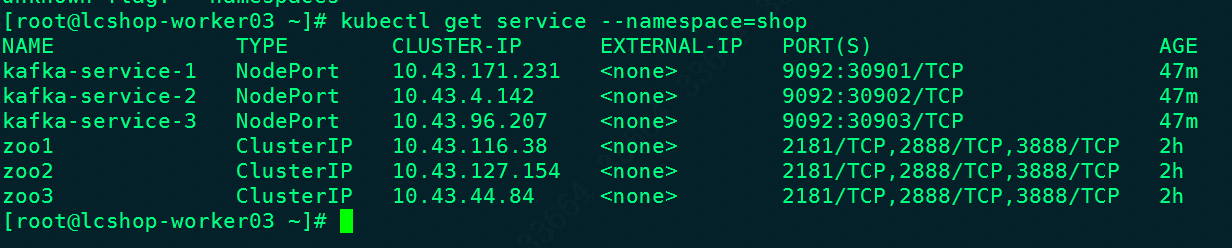

After deploying, we still need to verify. On a worker, first run `kubectl get service --all-namespaces` to get the CLUSTER-IP of each Kafka service, as shown:

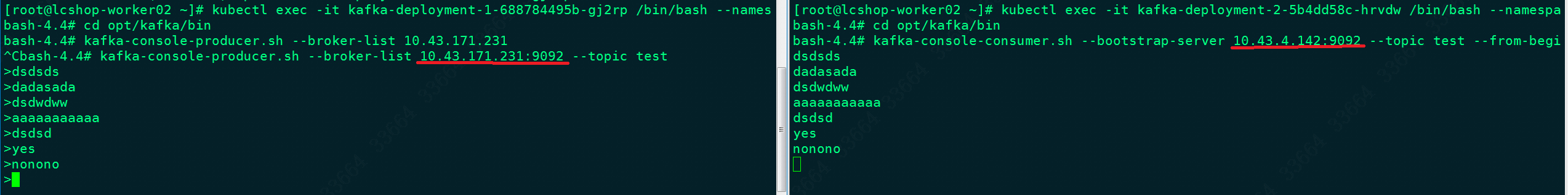

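Then run `kubectl exec -it <kafka-pod-name> --namespace=shop -- /bin/bash` to enter a Kafka pod other than the one whose IP you just noted, `cd /opt/kafka/bin`, and run `kafka-console-producer.sh --broker-list <CLUSTER-IP of any Kafka service>:9092 --topic test`. Now open a second Xshell window, enter any Kafka pod the same way, go to /opt/kafka/bin as well, and run `kafka-console-consumer.sh --bootstrap-server <CLUSTER-IP of another Kafka service>:9092 --topic test --from-beginning`. Whatever you type in the first window should now appear in the second one; if it does, the cluster works!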
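The same check can be run without opening a shell in the pod first. The sketch below wraps the two console tools with the flags used above; the function names are hypothetical, and the pod name, namespace and broker IP are passed in by the caller.

```shell
# kafka_produce POD NAMESPACE BROKER_IP
# Start an interactive console producer on topic "test".
kafka_produce() {
  kubectl exec -it "$1" --namespace="$2" -- \
    /opt/kafka/bin/kafka-console-producer.sh \
    --broker-list "$3:9092" --topic test
}

# kafka_consume POD NAMESPACE BROKER_IP
# Read topic "test" from the beginning.
kafka_consume() {
  kubectl exec -it "$1" --namespace="$2" -- \
    /opt/kafka/bin/kafka-console-consumer.sh \
    --bootstrap-server "$3:9092" --topic test --from-beginning
}

# Usage, one call per terminal window (placeholder arguments):
#   kafka_produce <kafka-pod-a> shop <cluster-ip-1>
#   kafka_consume <kafka-pod-b> shop <cluster-ip-2>
```

Pointing the producer and the consumer at different brokers is what makes this a test of the cluster rather than of a single node.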

Deploying kafka-manager

The developers then came with a new request: a visual tool for Kafka to make their work easier. I chose kafka-manager for this; the YAML is as follows:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kafka-manager
  labels:
    app: kafka-manager
spec:
  type: NodePort
  ports:
    - name: kafka
      port: 9000
      targetPort: 9000
      nodePort: 30900
  selector:
    app: kafka-manager
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kafka-manager
  labels:
    app: kafka-manager
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kafka-manager
  template:
    metadata:
      labels:
        app: kafka-manager
    spec:
      containers:
        - name: kafka-manager
          image: zenko/kafka-manager:1.3.3.22
          imagePullPolicy: IfNotPresent
          ports:
            - name: kafka-manager
              containerPort: 9000
              protocol: TCP
          env:
            - name: ZK_HOSTS
              value: "<ZooKeeper IP>:2181"
          livenessProbe:
            httpGet:
              path: /api/health
              port: kafka-manager
          readinessProbe:
            httpGet:
              path: /api/health
              port: kafka-manager
          resources:
            limits:
              cpu: 500m
              memory: 512Mi
            requests:
              cpu: 250m
              memory: 256Mi
```

Again under Workloads > Import YAML, paste the YAML above and submit it; the kafka-manager deployment is then created, as shown:

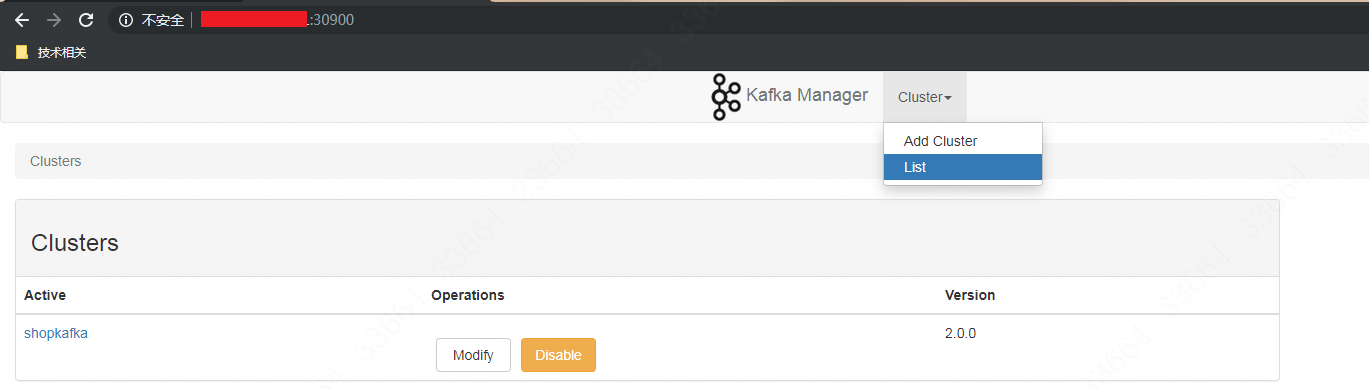

Configure the corresponding port in Alibaba Cloud SLB, and kafka-manager becomes reachable from a browser.
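Before wiring up the load balancer, it can help to confirm that the NodePort answers at all. A minimal sketch that queries the same `/api/health` path the probes in the manifest use; the function name is hypothetical and the host is any node IP reachable from where you run it.

```shell
# km_health HOST [PORT]
# Query kafka-manager's health endpoint through the NodePort (30900 by default).
km_health() {
  curl -fsS "http://$1:${2:-30900}/api/health"
}

# Example with a placeholder node address:
#   km_health <node-ip>
```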

References

https://o-my-chenjian.com/2017/04/11/Deploy-Kafka-And-ZP-With-K8s/

http://blog.yuandingit.com/2019/03/26/Practice-of-Business-Containerization-Transformation-(3)/

https://www.cnblogs.com/00986014w/p/9561901.html (author of the YAML used in this post)

https://k8smeetup.github.io/docs/tutorials/stateful-application/zookeeper/

http://www.mydlq.club/article/29/